The Communication Gap in GenAI Risk Management

In the rapidly evolving landscape of Generative AI, communicating risks effectively to executives requires more than technical explanations. The gap between technical and business perspectives is most visible when technical teams raise red flags while business leaders see only green lights for innovation.

Imagine your organization is rolling out a member-facing GenAI chatbot, with leadership excited about the potential revenue and productivity gains. When an executive responds to concerns about AI hallucinations with “How is that different from humans making mistakes?”, technical teams find themselves struggling to articulate why GenAI risks are fundamentally different and why traditional risk management approaches may be insufficient for these new technologies.

Through my experience leading GenAI security at a major financial technology company, I’ve seen how structured communication turns executive skepticism into action. The framework in this article doesn’t just raise awareness; it enables informed decision-making and proactive governance. Implemented effectively, it establishes guardrails that protect the organization while enabling the innovation that executives rightfully prioritize.

What You’ll Learn

- A structured framework for translating technical GenAI risks into executive-relevant concerns

- Specific language and examples for each risk category that resonate with business leaders

- Practical meeting strategies for presenting these risks effectively

- Implementation approaches that balance innovation with appropriate safeguards

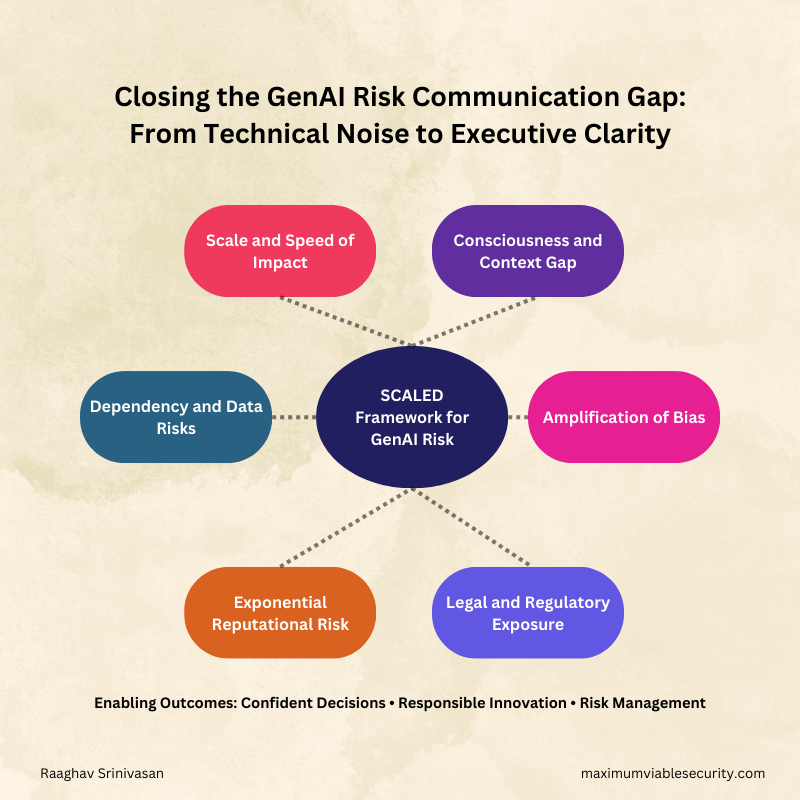

The SCALED Framework for GenAI Risk Communication

The SCALED framework provides six essential dimensions that differentiate AI risks from traditional technology risks. For each dimension, I’ve included executive-friendly language and specific examples to help translate technical concerns into business impact.

S – Scale and Speed of Impact

Why Executives Should Care: Unlike human errors, GenAI mistakes can instantly affect your entire customer base before manual oversight can intervene.

Specifically, GenAI mistakes can be:

- Deployed across thousands or millions of interactions simultaneously

- Propagated at machine speed without human intervention

- Replicated across multiple channels before detection

Translation Tip: Frame this in terms of customer impact multipliers and response time requirements.

Executive-Friendly Example: “While a human customer service rep might misinform one customer, our AI assistant could potentially provide incorrect information to 10,000 customers in minutes. Our current incident response process is designed for human-speed errors, not machine-scale issues.”
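To make the “customer impact multiplier” concrete, a back-of-the-envelope calculation can help. The Python sketch below is a minimal illustration, not a measurement: the `Channel` class, the `customers_exposed` function, and every throughput and error figure are hypothetical assumptions chosen to mirror the example above. It applies the same mistake and the same 20-minute detection window to a single human rep and to an AI assistant.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    """A customer-facing channel. All figures used below are hypothetical."""
    name: str
    interactions_per_minute: float  # how many customers the channel serves per minute
    affected_share: float           # fraction of interactions that hit the faulty answer


def customers_exposed(channel: Channel, minutes_to_contain: float) -> int:
    """Expected number of customers who receive the incorrect answer
    before the error is detected and contained."""
    return round(channel.interactions_per_minute * channel.affected_share * minutes_to_contain)


# Same mistake, same 20-minute detection window, very different blast radius.
human_rep = Channel("human rep", interactions_per_minute=0.2, affected_share=0.25)
ai_assistant = Channel("AI assistant", interactions_per_minute=2_000, affected_share=0.25)

for channel in (human_rep, ai_assistant):
    print(f"{channel.name}: customers exposed = {customers_exposed(channel, minutes_to_contain=20):,}")
# human rep: customers exposed = 1
# AI assistant: customers exposed = 10,000
```

Swapping in your own channel volumes and realistic detection times turns the same arithmetic into the “response time requirements” conversation: exposure grows linearly with `minutes_to_contain`, so halving detection time halves the number of customers affected.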

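Real-World Case Study: In 2024, the FTC took action against DoNotPay, alleging that it marketed its AI chatbot as a “robot lawyer” that could substitute for human legal expertise without testing whether its output met that standard. The case underscores how an untested AI service can expose a large user base to unreliable advice. (FTC Press Release)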

C – Consciousness and Context Gap

Why Executives Should Care: GenAI systems lack the intuitive understanding of company values and situational awareness that human employees develop.

GenAI systems:

- Lack human consciousness and moral awareness

- Cannot recognize when they’re in ethically sensitive situations

- Miss contextual cues that humans intuitively understand

- Don’t experience the consequences of their mistakes

Translation Tip: Connect this to training and judgment capabilities that executives value in human team members.

Executive-Friendly Example: “Unlike employees who understand our company values and can recognize sensitive topics, our AI needs explicit guardrails for every scenario we can anticipate. It’s like having a new employee who never improves their judgment regardless of how many times you provide feedback.”

A – Amplification of Bias

Why Executives Should Care: Systematic bias in AI can create immediate compliance issues and long-term brand damage that scales with usage.

GenAI can:

- Systematically amplify existing biases in training data

- Scale discriminatory patterns before anyone detects them

- Create feedback loops that reinforce problematic outputs

Translation Tip: Connect bias concerns to concrete business consequences, such as legal exposure, market segmentation issues, and setbacks to diversity goals.

Executive-Friendly Example: “If our hiring AI develops a bias, it won’t just affect one candidate—it could systematically disadvantage entire groups across all hiring decisions. This creates both immediate legal exposure and undermines our diversity initiatives at scale.”

Real-World Case Study: In 2024, Google’s Gemini faced backlash after its image generation feature depicted historical figures with inaccurate racial and ethnic identities. The criticism forced Google to pause Gemini’s generation of images of people while it made adjustments. (Article on The Guardian)

L – Legal and Regulatory Exposure

Why Executives Should Care: GenAI operates in a rapidly evolving regulatory landscape where compliance requirements are increasing and penalties can be severe.

GenAI deployment creates:

- Novel compliance challenges across multiple jurisdictions

- Rapidly evolving regulatory requirements

- Potential liability without established case law

- Data privacy implications at scale

Translation Tip: Frame this in terms of regulatory uncertainty and the pace of legal change in AI governance.

Executive-Friendly Example: “We’re operating in a regulatory environment that’s still taking shape, with potential fines that can scale with company revenue or with the number of affected users. The EU’s AI Act and similar regulations in development create compliance requirements that don’t exist for traditional software.”

Real-World Case Study: In 2024, the SEC penalized investment advisers Delphia and Global Predictions for misrepresenting their use of AI, showing how AI governance failures can lead to legal repercussions. (Mayer Brown Analysis)

E – Exponential Reputational Risk

Why Executives Should Care: GenAI failures can create viral brand damage at unprecedented speed and scale.

GenAI failures can lead to:

- Viral spread of AI mistakes on social media

- Brand damage that scales with user base

- Narratives that are difficult to control once an incident goes public

- Long-term trust erosion across products

Translation Tip: Connect to brand value and customer trust metrics the executive team already tracks.

Executive-Friendly Example: “A single problematic AI interaction can be screenshot, shared millions of times, and become the defining narrative about our technology—affecting not just this product but our entire brand. Unlike human errors that might affect individual customers, AI failures can instantly become representative of our entire company approach.”

Real-World Case Study: Problematic chatbot output can trigger viral public backlash. In 2016, Microsoft shut down its Tay chatbot within 24 hours of launch after users manipulated it into posting offensive content. (BBC Report)

D – Dependency and Data Risks

Why Executives Should Care: GenAI systems create new operational dependencies and data vulnerabilities that can affect business continuity, security posture, and compliance obligations.

GenAI creates unique dependency and data risks:

- Reliance on third-party foundation models with limited transparency

- Potential for protected data exposure through model outputs

- New attack vectors like prompt injection and model poisoning

- Complex data lineage challenges for AI-generated content

Translation Tip: Connect these risks to existing concerns about vendor management, data protection, and business continuity that executives already understand.

Executive-Friendly Example: “While traditional software operates predictably based on our specifications, GenAI systems may change behavior unexpectedly when their underlying models are updated by providers. Additionally, these systems can inadvertently leak sensitive information in ways that bypass traditional data loss prevention controls, creating compliance exposures we can’t easily detect with current monitoring.”

Real-World Case Study: In 2024, a Disney employee unknowingly downloaded an AI tool laced with malware, leading to a massive data breach. Hackers accessed 44 million internal Slack messages, exposing sensitive customer and employee data. The breach underscored the risks of unauthorized AI tool usage and third-party dependencies. Disney subsequently announced that it would stop using Slack for internal communications. (National CIO Review)

Meeting Structure: Presenting SCALED to Executives

When preparing to present the SCALED framework to executives, structure your presentation to maximize impact:

- Start with business alignment (5 minutes)
  - Acknowledge the business goals for GenAI adoption
  - Express support for innovation with appropriate guardrails
  - Set the tone: “This isn’t about whether to implement, but how to do it safely”

- Present SCALED dimensions with examples (15 minutes)
  - Select the 2-3 SCALED dimensions most relevant to your specific implementation
  - For each, provide a concrete example relevant to your industry
  - Share results from red teaming exercises that illustrate these dimensions
  - Include both risk scenarios and mitigation approaches

- Connect to existing business frameworks (5 minutes)
  - Link to existing risk management processes
  - Compare to familiar risks in a new context
  - Position GenAI governance as an extension of existing practices

- Close with actionable recommendations (5 minutes)
  - Suggest specific governance approaches
  - Propose metrics to monitor
  - Outline resource requirements

Executive Translation Guide: Technical to Business Language

When communicating with executives, replacing technical jargon with business-focused language dramatically improves understanding and action:

| Technical Term | Executive-Friendly Alternative | Why It Works Better |
|---|---|---|
| “The model might hallucinate” | “The system can generate convincing misinformation at scale” | Conveys both the nature and business impact of the problem |
| “We need more robust prompt engineering” | “We need clearer operational boundaries for the AI” | Frames the solution in terms of business controls rather than technical implementation |
| “The transformer architecture limitations” | “The system’s inherent design constraints” | Simplifies the concept while preserving the core limitation message |
| “Token-level optimization” | “Precision improvements in system responses” | Connects technical improvement to customer-facing outcomes |

Using this translation approach keeps discussions focused on business implications rather than technical details, making it easier for executives to make informed decisions.

Implementing the Framework

When communicating GenAI risks to executives, these practical approaches will help translate technical concerns into business priorities:

- Start with business outcomes, not technical details
  - Begin with how risk governance supports business goals
  - Position guardrails as enablers of sustainable innovation
  - Align with strategic initiatives already on the executive agenda

- Quantify potential impact whenever possible
  - Users potentially affected by a failure scenario
  - Response time required for containment
  - Estimated financial and reputational exposure
  - Implementation costs for suggested guardrails

- Use concrete examples relevant to your industry
  - Reference incidents from competitors or adjacent industries
  - Create “day in the life” scenarios of risk materialization
  - Develop comparison points to familiar technologies

- Present risk mitigation options alongside each concern
  - Propose specific monitoring approaches
  - Suggest governance structures and roles
  - Outline testing protocols before deployment
  - Create incident response playbooks specific to GenAI

- Connect to strategic objectives like market leadership or customer trust
  - Show how appropriate risk management supports brand differentiation
  - Position robust GenAI governance as a competitive advantage
  - Link risk mitigation to customer experience consistency
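One common way to put numbers behind the “quantify potential impact” points is a simple expected-loss model: the likelihood of a failure scenario multiplied by its impact, compared before and after a proposed guardrail. The Python sketch below assumes that model; the `FailureScenario` class, its fields, the helper functions, the scenario name, and every number are hypothetical placeholders to replace with your own estimates. Reputational damage is captured only to the extent you fold an estimate of it into the cost inputs.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FailureScenario:
    """A single GenAI failure scenario. All figures below are hypothetical placeholders."""
    name: str
    annual_likelihood: float  # estimated probability the scenario occurs in a given year (0 to 1)
    users_affected: int       # users reached before the incident is contained
    cost_per_user: float      # support, remediation, and compensation cost per affected user
    fixed_cost: float         # legal, regulatory, and communications cost per incident


def incident_impact(s: FailureScenario) -> float:
    """Direct cost if the scenario occurs once."""
    return s.users_affected * s.cost_per_user + s.fixed_cost


def expected_annual_loss(s: FailureScenario) -> float:
    """Likelihood-weighted exposure: P(scenario occurs) x impact if it occurs."""
    return s.annual_likelihood * incident_impact(s)


def guardrail_net_benefit(before: FailureScenario, after: FailureScenario,
                          annual_guardrail_cost: float) -> float:
    """Reduction in expected annual loss, minus the yearly cost of the guardrail."""
    return expected_annual_loss(before) - expected_annual_loss(after) - annual_guardrail_cost


# Hypothetical scenario: the assistant gives wrong fee information at scale.
before = FailureScenario("incorrect fee guidance", annual_likelihood=0.3,
                         users_affected=10_000, cost_per_user=50.0, fixed_cost=200_000.0)
# Assumed effect of output filtering plus real-time monitoring:
# a lower likelihood and much faster containment (fewer users affected).
after = replace(before, annual_likelihood=0.1, users_affected=500)

print(f"Expected annual loss without guardrails: ${expected_annual_loss(before):,.0f}")
print(f"Expected annual loss with guardrails:    ${expected_annual_loss(after):,.0f}")
print(f"Net annual benefit of guardrails:        ${guardrail_net_benefit(before, after, 60_000):,.0f}")
```

With these placeholder inputs the script reports expected annual losses of $210,000 without guardrails and $22,500 with them, for a net benefit of $127,500 after a $60,000 annual guardrail cost. The value for executives lies less in the exact figure than in making each assumption explicit so it can be challenged, which also fits the risk-adjusted framing that resonates with CFOs.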

Executive-Specific Approaches

Different executives may respond to different aspects of the SCALED framework. The following approaches are illustrative and should be tailored to your organization’s specific structure, culture, and strategic priorities:

For CEOs: Focus on reputation risk and market leadership through responsible innovation.

For CFOs: Emphasize quantifiable risk exposure, compliance costs, and risk-adjusted return on AI investments.

For CIOs/CTOs: Connect GenAI governance to existing technology risk frameworks while highlighting unique differences.

For CMOs: Concentrate on brand protection, customer trust, and experience consistency.

For CLOs (Chief Legal Officers): Detail the evolving regulatory landscape and proactive compliance approaches.

Remember that executive concerns and priorities vary significantly across industries and organizational cultures. The most effective approach will align with your specific executive team’s communication style and strategic focus areas.

Conclusion

By framing GenAI risks through the SCALED lens, you transform vague technical concerns into business-critical considerations that executives can understand, prioritize, and address. The most successful GenAI implementations aren’t those that avoid all risks—they’re the ones where technical and business leaders establish a shared understanding of the unique risk profile these technologies present, and collaborate on appropriate governance approaches.

This balanced approach protects both your organization and its customers.

Join the Conversation

Struggling to get executives to take GenAI risks seriously? Share your biggest challenges—or success stories—in bridging the technical-executive gap. Let’s build a playbook for responsible GenAI adoption together.

Looking for personalized guidance? Connect with me on LinkedIn to discuss how the SCALED framework might be adapted for your specific organizational needs.

Together, we can build a community of practice around responsible GenAI implementation that balances innovation with appropriate risk management.